Ethereum creator Vitalik Buterin is digging into a new concept for how modern computing can be split into two parts: a “glue” component and a “coprocessor.”

The idea here is simple: divide the work. The glue does the general, not-so-intense tasks, while the coprocessor takes care of the heavy, structured computations.

Vitalik breaks it down for us, saying that most computations in systems like the Ethereum Virtual Machine (EVM) are already split this way. Some parts of the process need high efficiency, while others are more flexible but less efficient.

Take Ethereum, for example. In a recent transaction where Vitalik updated his blog’s IPFS hash on the Ethereum Name Service (ENS), the gas consumption was spread across different tasks. The transaction burned through a total of 46,924 gas.

The breakdown looks like this: 21,000 gas for the base cost, 1,556 for calldata, and 24,368 for EVM execution. Specific operations like SLOAD and SSTORE consumed 6,400 and 10,100 gas, respectively. LOG operations took 2,149 gas, and the rest was eaten up by miscellaneous processes.

Vitalik says that around 85% of the gas in that transaction went to a few heavy operations—like storage reads and writes, logging, and cryptography.

The rest was what he calls “business logic,” the simpler, higher-level stuff, like processing the data that dictates what record to update.
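The arithmetic behind those figures can be checked in a few lines. This is a rough sketch using only the numbers quoted above. The grouping into "heavy" and "glue" is an assumption, since the article does not spell it out: here the base cost, storage reads and writes, and logs count as heavy, and calldata plus miscellaneous EVM execution count as glue. Under that assumption the heavy share comes out close to the 85% Vitalik cites.

```python
# Gas figures as quoted in the article for the ENS update transaction.
TOTAL_GAS = 46_924
top_level = {"base": 21_000, "calldata": 1_556, "evm_execution": 24_368}
evm_ops = {"SLOAD": 6_400, "SSTORE": 10_100, "LOG": 2_149}

# The three top-level items should add up to the quoted total.
assert sum(top_level.values()) == TOTAL_GAS

# "Miscellaneous" EVM execution is whatever the named opcodes leave over.
evm_other = top_level["evm_execution"] - sum(evm_ops.values())

# Assumption (not from the article): "heavy" = base cost + storage + logs.
heavy = top_level["base"] + sum(evm_ops.values())
glue = TOTAL_GAS - heavy  # calldata + miscellaneous EVM execution

heavy_share = heavy / TOTAL_GAS
glue_share = glue / TOTAL_GAS

print(f"EVM other: {evm_other:>6,} gas")
print(f"heavy:     {heavy:>6,} gas ({heavy_share:.1%})")
print(f"glue:      {glue:>6,} gas ({glue_share:.1%})")
```

A different grouping, for example treating the base cost as neutral overhead, would shift the percentages, so the split is best read as an order-of-magnitude picture.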

Vitalik also points out that you can see the same thing in AI models written in Python. For instance, when running a forward pass in a transformer model, most of the work is done by vectorized operations, like matrix multiplication.

These operations are usually written in optimized code, often CUDA running on GPUs. The high-level logic, though, is in Python, a general but slow language that accounts for only a small part of the total computational cost.
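A toy illustration of that split, using only the Python standard library, is sketched below. It is not a transformer: it is a small stack of dense layers, and the pure-Python `matmul` merely stands in for an optimized GPU kernel. The function names, layer sizes and the `stats` counters are all hypothetical. The point is only to count how much arithmetic lands in the kernel versus how few decisions the high-level loop makes.

```python
import random

def matmul(a, b, stats):
    """Stand-in for an optimized kernel: multiplies two row-major matrices."""
    rows, inner, cols = len(a), len(b), len(b[0])
    stats["kernel_mults"] += rows * inner * cols  # one multiply per (i, k, j)
    return [[sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
            for i in range(rows)]

def relu(m, stats):
    """Elementwise nonlinearity; cheap compared with the matmul above."""
    stats["elementwise_ops"] += len(m) * len(m[0])
    return [[max(0.0, v) for v in row] for row in m]

def forward(x, layers, stats):
    """High-level 'glue': only decides what runs, and in which order."""
    for w in layers:
        stats["glue_steps"] += 1
        x = relu(matmul(x, w, stats), stats)
    return x

# Hypothetical sizes chosen only to keep the example fast.
TOKENS, DIM, N_LAYERS = 8, 32, 4
rng = random.Random(0)
x = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(TOKENS)]
layers = [[[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(DIM)]
          for _ in range(N_LAYERS)]

stats = {"kernel_mults": 0, "elementwise_ops": 0, "glue_steps": 0}
out = forward(x, layers, stats)

total = sum(stats.values())
for name, count in stats.items():
    print(f"{name:>16}: {count:>6} ({count / total:.1%})")
```

Even at these tiny sizes nearly all counted operations sit inside `matmul`, and the gap widens as the matrices grow, which is why moving only that function to specialized hardware captures most of the benefit.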

The Ethereum developer also thinks this pattern is becoming more common in modern programmable cryptography, like SNARKs.

He points to trends in STARK proving, where teams are building general-purpose provers for minimal virtual machines like RISC-V.

Any program that needs proving can be compiled into RISC-V, and the prover proves the RISC-V execution. This setup is convenient, but it comes with overhead. Programmable cryptography is already expensive, and running the code inside a RISC-V interpreter adds another layer of cost on top of it.

So, what do developers do? They hack around the problem. They identify the specific, expensive operations that take up most of the computation—like hashes and signatures—and create specialized modules to prove these operations efficiently.

Then they combine the general RISC-V proving system with these efficient, specialized systems, getting the best of both worlds. This approach, Vitalik notes, will likely be seen in other areas of cryptography, like multi-party computation (MPC) and fully homomorphic encryption (FHE).
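The routing idea can be sketched without any cryptography at all. The snippet below is not a prover and generates no proofs; it is a minimal interpreter, with a made-up three-instruction format, that handles ordinary instructions one at a time and hands hashing to a specialized routine. The instruction names (`LOAD`, `CONCAT`, `SHA256`) and the `COPROCESSORS` registry are hypothetical and do not correspond to any real RISC-V prover's interface.

```python
import hashlib

def sha256_coprocessor(data: bytes) -> bytes:
    """Specialized path: one call into hashlib's native SHA-256."""
    return hashlib.sha256(data).digest()

# Hypothetical registry of operations that bypass the generic interpreter.
COPROCESSORS = {"SHA256": sha256_coprocessor}

def run(program, registers):
    """Generic path: interpret one instruction at a time."""
    counts = {"generic": 0, "coprocessor": 0}
    for op, dst, *args in program:
        if op in COPROCESSORS:
            # Expensive, structured work is delegated in a single step.
            registers[dst] = COPROCESSORS[op](registers[args[0]])
            counts["coprocessor"] += 1
        elif op == "LOAD":
            registers[dst] = args[0]
            counts["generic"] += 1
        elif op == "CONCAT":
            registers[dst] = registers[args[0]] + registers[args[1]]
            counts["generic"] += 1
        else:
            raise ValueError(f"unknown op: {op}")
    return counts

# Merkle-style parent hash: glue moves bytes around, the coprocessor hashes.
program = [
    ("LOAD", "a", b"left leaf"),
    ("LOAD", "b", b"right leaf"),
    ("SHA256", "ha", "a"),
    ("SHA256", "hb", "b"),
    ("CONCAT", "ab", "ha", "hb"),
    ("SHA256", "root", "ab"),
]
regs = {}
counts = run(program, regs)
print(counts, regs["root"].hex())
```

In a real proving system each `SHA256` step would be covered by a dedicated hash circuit rather than by thousands of interpreted instructions, while the `LOAD` and `CONCAT` steps would stay on the general-purpose path.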

Where glue and coprocessor come in

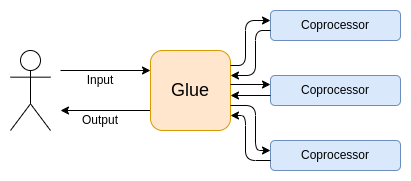

According to Vitalik, what we’re seeing is the rise of a “glue and coprocessor” architecture in computing. The glue is general and slow, responsible for passing data between one or more coprocessors, which are specialized and fast. GPUs and ASICs are perfect examples of coprocessors.

They’re less general than CPUs but way more efficient for certain tasks. The tricky part is finding the right balance between generality and efficiency.

In Ethereum, the EVM doesn’t need to be efficient; it just needs to be familiar. By adding the right coprocessors or precompiles, you can make an inefficient VM almost as effective as a natively efficient one.
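Modular exponentiation is one concrete case: the EVM exposes it as a precompile rather than expecting contracts to implement it opcode by opcode. The sketch below is an analogy in Python, not EVM code, and it models no gas costs. `modexp_in_vm` is a hypothetical square-and-multiply loop written as ordinary high-level logic, and Python's built-in three-argument `pow` stands in for the precompile. The inputs are arbitrary large integers picked for illustration.

```python
def modexp_in_vm(base, exponent, modulus):
    """Generic path: square-and-multiply written as ordinary high-level code."""
    result = 1 % modulus
    base %= modulus
    steps = 0
    while exponent > 0:
        if exponent & 1:
            result = (result * base) % modulus
        base = (base * base) % modulus
        exponent >>= 1
        steps += 1  # one loop iteration per bit of the exponent
    return result, steps

def modexp_precompile(base, exponent, modulus):
    """Specialized path: a single call into the runtime's native routine."""
    return pow(base, exponent, modulus)

# Arbitrary illustrative inputs (assumption, not taken from the article).
b, e, m = 7, 2**255 - 19, 2**256 - 189

slow, steps = modexp_in_vm(b, e, m)
fast = modexp_precompile(b, e, m)

assert slow == fast  # both paths compute the same value
print(f"interpreted loop iterations: {steps}, precompile calls: 1")
```

Both paths return the same result, but the first spends one interpreted loop iteration per exponent bit while the second is a single delegated call. That is the sense in which a familiar but slow VM can lean on a handful of precompiles for its expensive operations.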

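Vitalik applies the same reasoning to hardware, where the worry is that open, security-focused chips cannot match proprietary ones on speed. But what if that didn’t matter? What if we accepted that open chips would be slower and used a glue and coprocessor architecture to compensate?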

The idea is that you could design a main chip optimized for security and open-source design while using proprietary ASIC modules for the most intensive computations.

Sensitive tasks could be handled by the secure main chip, while the heavy lifting, like AI processing or ZK-proving, could be offloaded to the ASIC modules.

cryptopolitan.com
