If last year was defined by groundbreaking AI models with impressive conversational abilities, many think 2025 may be the year of AI agents—autonomous systems designed to perform specific tasks with minimal human guidance.

These specialized tools do more than chat: they autonomously execute tasks that go beyond mere content generation.

The research agent hype gained momentum when You.com introduced its pioneering research tool in late 2024.

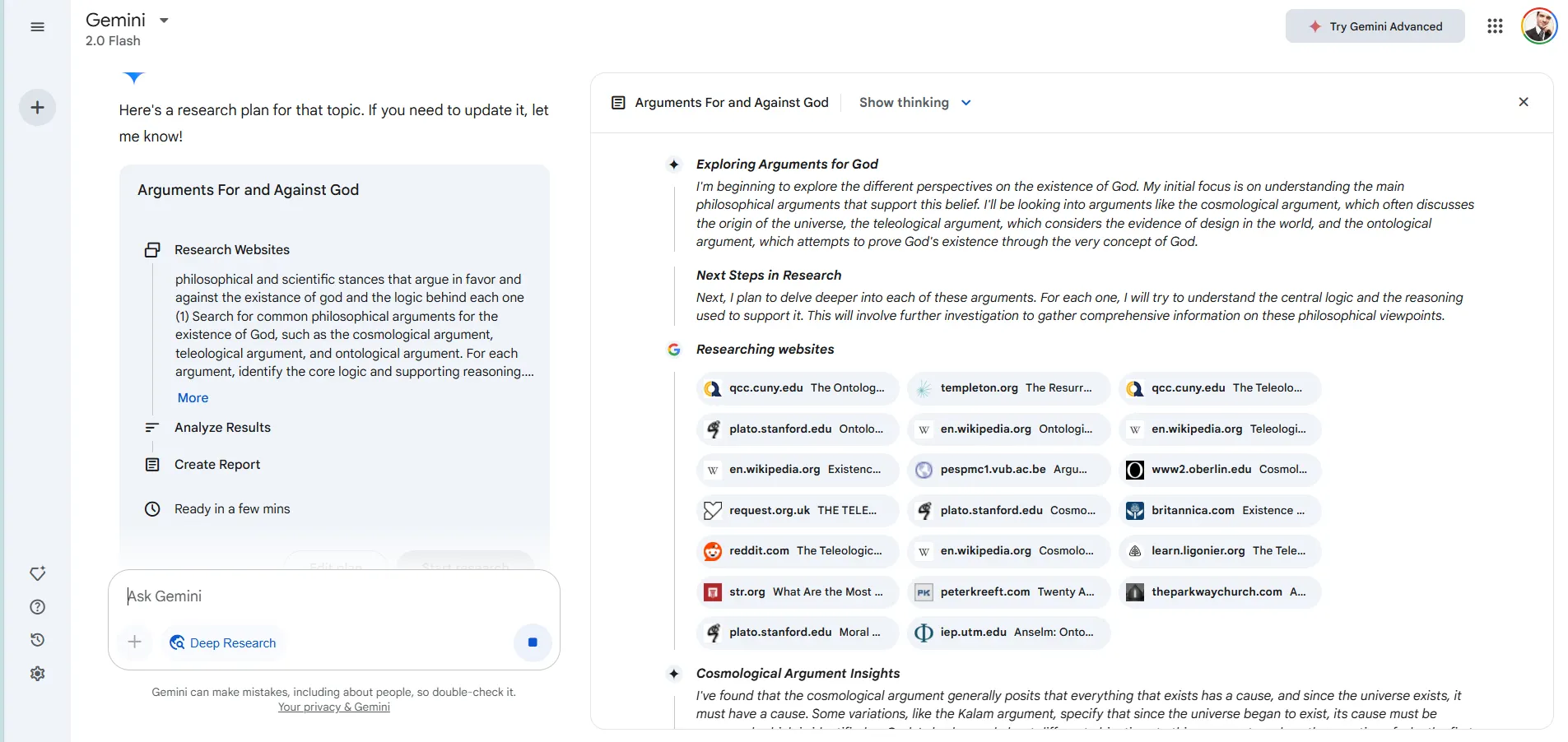

Google quickly responded with Gemini's research agent, capable of generating comprehensive, citation-rich analyses spanning dozens of pages, making it available for Gemini Advanced users at $20 a month.

OpenAI entered the competition in February with its Deep Research assistant, powered by a version of its o3 model, while Elon Musk's xAI unveiled deep research capabilities in Grok-3 later that month.

Now, Grok and Gemini offer their research agents for free, while OpenAI charges $20 a month for 10 uses per month in its Plus tier and $200 a month for 120 uses per month in its Pro tier.

But which one actually delivers the most useful results? We tested all three to evaluate how these digital research companions perform when tackling identical challenges.

(Note: All the results are in our GitHub repository.)

Preparation Before Research

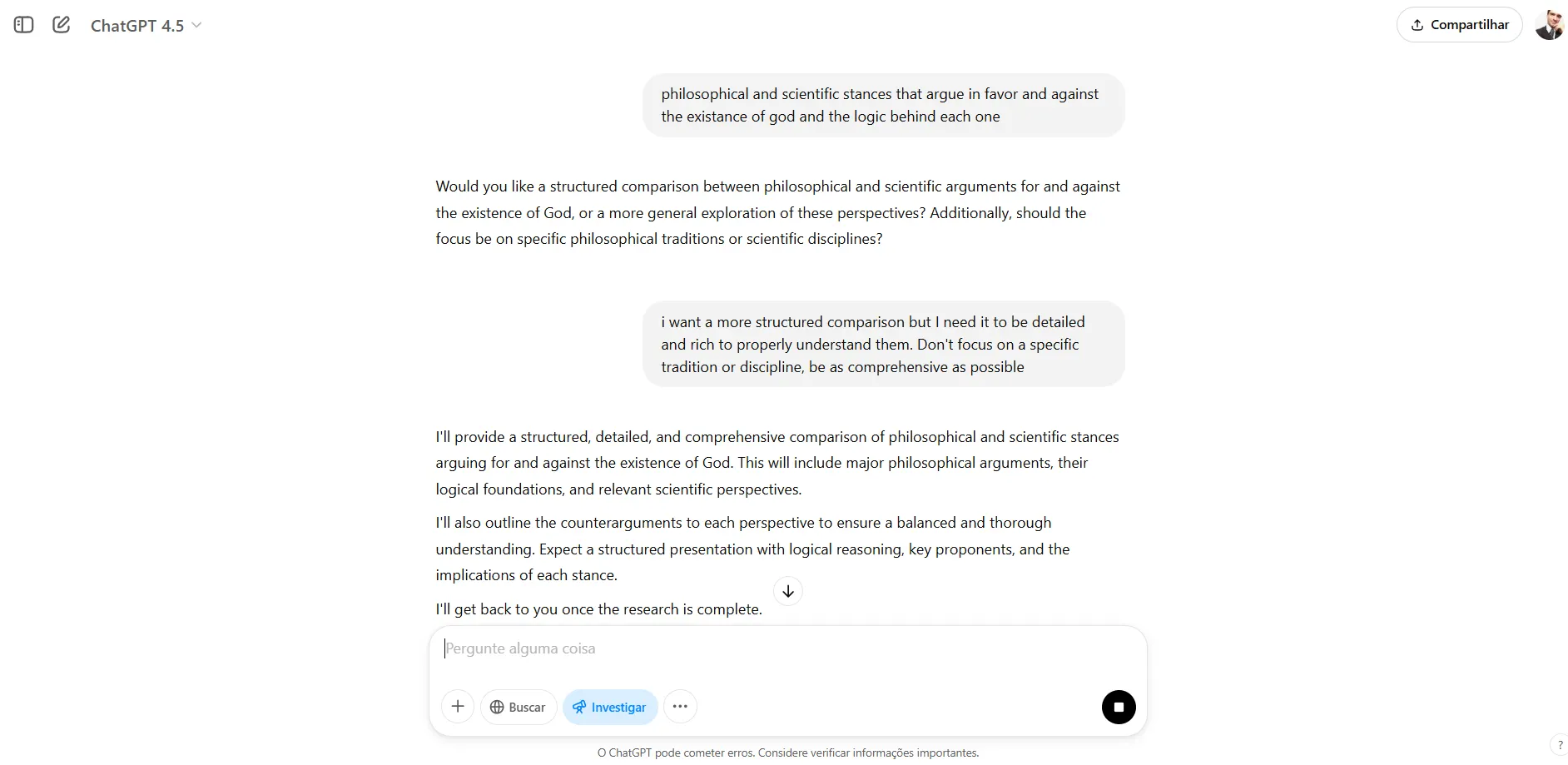

The moment you task these AI systems with research, their unique personalities become apparent.

ChatGPT takes a cautious, methodical approach, asking clarifying questions before proceeding. By first establishing precise parameters around user intent, it aims to minimize hallucinations and maximize relevance.

It also helps the model avoid going down blind alleys and reaching wrong conclusions.

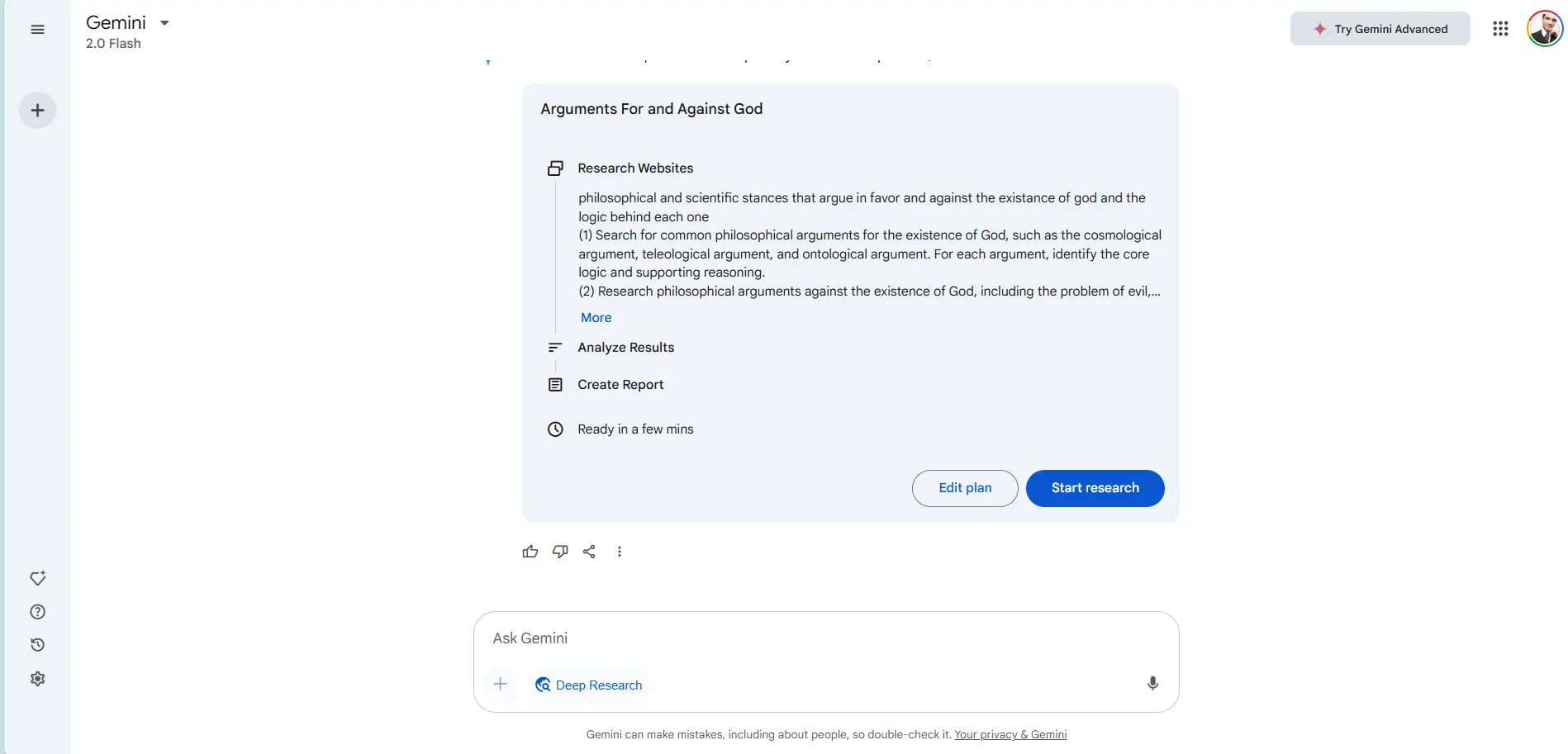

Gemini takes a less obvious route, operating more like a collaborative research partner.

Before getting started, it will develop a structured research plan that you can review and modify before execution. This transparent approach gives users more control over the research direction from the outset.

It’s also far more detailed, giving users granular control over the research agent: they can add, remove, and modify every single step of the investigation until the plan is exactly right.

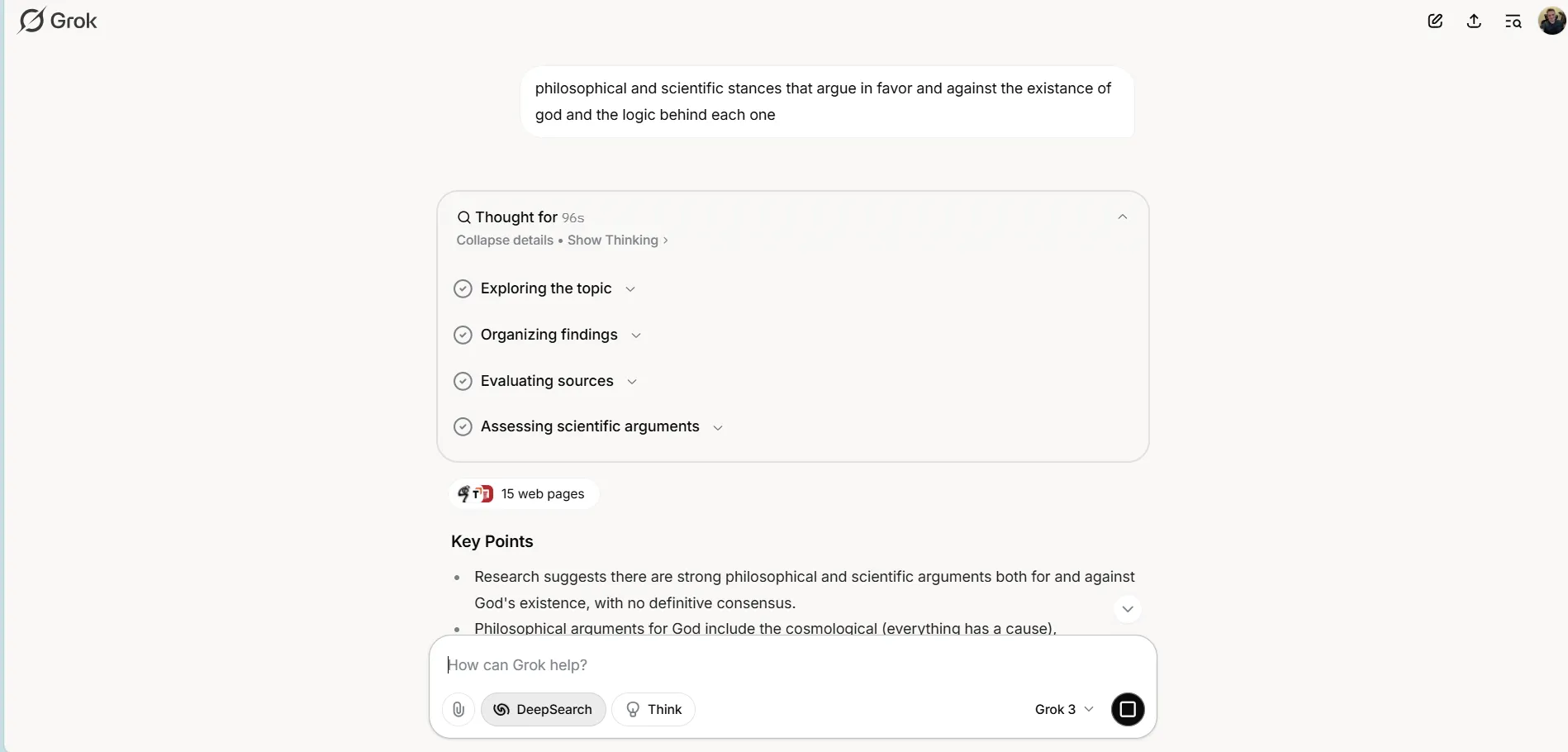

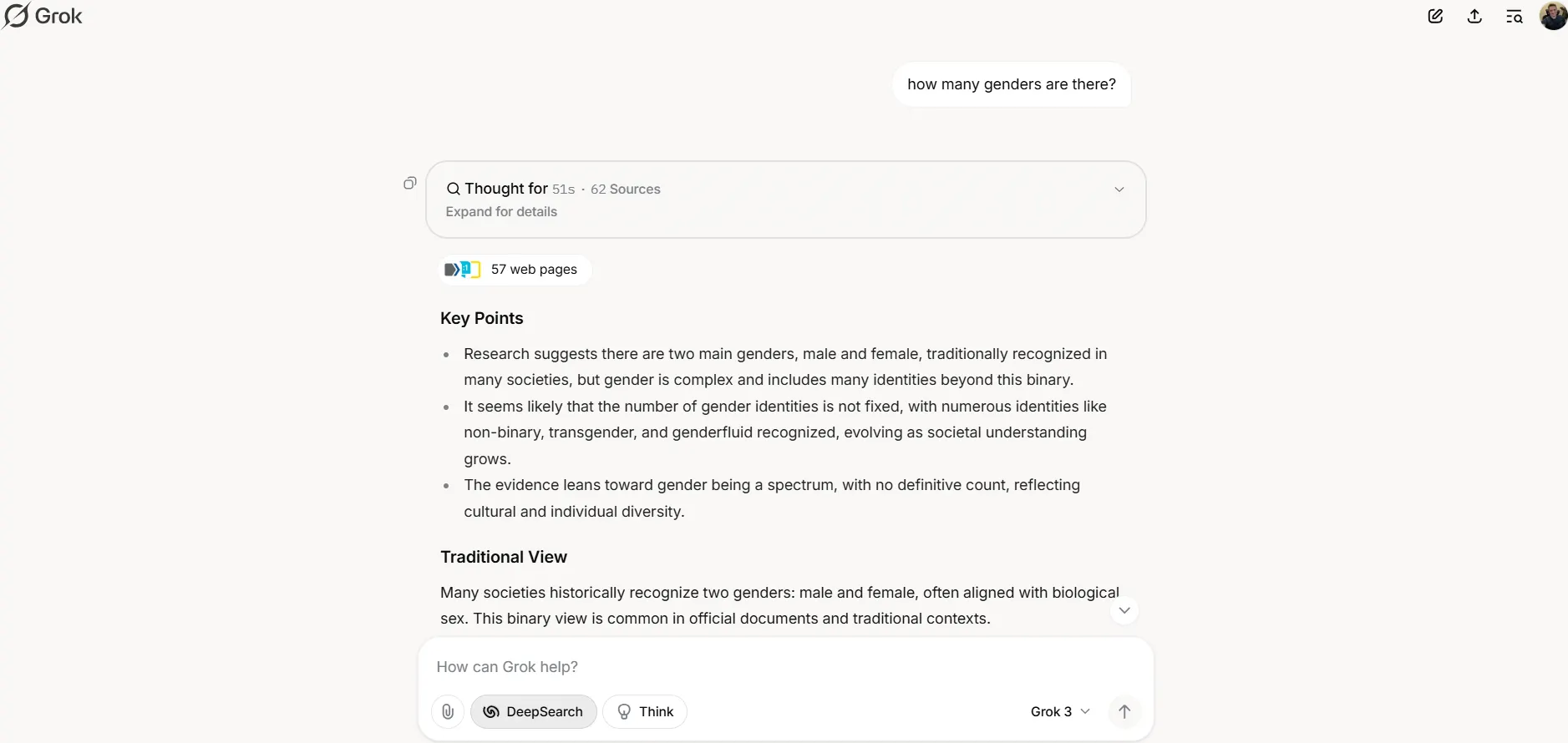

Grok-3, true to its Musk-influenced origins, skips the pleasantries and dives into action.

No questions, no plans—just immediate research execution with a focus on delivering results as quickly as possible.

If you want good results with Grok, you need to be incredibly detailed in your query.

These initial interactions aren't just interface differences—they reveal the fundamental philosophies driving each system's approach to information gathering.

Speed

In our timed trials, the performance differences were striking:

Starting all three systems at precisely 16:27:

- Grok-3 crossed the finish line first at 16:30 (just 3 minutes)

- Gemini completed its research at 16:38 (11 minutes)

- ChatGPT finally delivered results at 16:43 (16 minutes)

In other words, ChatGPT took 433% longer than Grok-3, more than five times as long.

For context, in the time it takes ChatGPT to complete one research task, Grok-3 could potentially finish five separate investigations, or run five iterations of a single research task to improve its quality.
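The 433% figure is measured relative to the fastest run. As a quick worked check, the Python snippet below uses only the start and finish times reported in this trial to reproduce both the percentage and the roughly five-to-one ratio. It is just arithmetic on our timestamps, not part of any agent's tooling, and the variable names are illustrative.

```python
from datetime import datetime

# Start and finish times reported in our timed trial (same day, HH:MM).
START = "16:27"
FINISH = {"Grok-3": "16:30", "Gemini": "16:38", "ChatGPT": "16:43"}


def minutes_between(start: str, end: str) -> int:
    """Whole minutes elapsed between two same-day HH:MM timestamps."""
    fmt = "%H:%M"
    delta = datetime.strptime(end, fmt) - datetime.strptime(start, fmt)
    return int(delta.total_seconds() // 60)


durations = {name: minutes_between(START, t) for name, t in FINISH.items()}
fastest = min(durations.values())
slowest = max(durations.values())

# "X% longer" is the extra time divided by the fastest time.
pct_longer = (slowest - fastest) / fastest * 100
# How many full fastest-length runs fit inside one slowest-length run.
runs_in_slowest = slowest // fastest

print(durations)                    # {'Grok-3': 3, 'Gemini': 11, 'ChatGPT': 16}
print(f"{pct_longer:.0f}% longer")  # 433% longer
print(runs_in_slowest)              # 5
```

Note that "433% longer" and "5.3 times as long" describe the same gap; the first counts only the extra 13 minutes, the second counts ChatGPT's full 16.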

This speed gap will matter more or less depending on the scenario. Users do sacrifice quality for speed, but speed seems to be the key differentiating factor that puts Grok in a different category of AI researchers.

Honestly though, how important is a difference of mere minutes in research?

For most people, it won’t matter at all. Go get a cup of coffee while AI does your work. If you're a journalist on a deadline, a particularly last-minute student finishing a paper, or a professional needing quick information for a meeting, Grok-3's speed advantage could be the difference between making or missing your deadline.

But for the rest of us, if you need details and in-depth information on a topic, you’re better off with ChatGPT or Gemini.

Gemini will even send you a notification to your smartphone, letting you know the research has been completed.

Watching the Models Work

A subtle difference between these systems lies in how much visibility they provide into their research process—a factor that directly impacts how much you can trust their conclusions.

Gemini is by far the best in this category, offering exceptional visibility into its information-gathering journey. You can follow along as it searches for information, evaluates sources, and builds its understanding.

This transparency creates something like a digital audit trail that helps build confidence in its findings.

ChatGPT, by contrast, operates more like a black box, being a lot more restrictive in its chain of thought and overall research process.

Users receive almost no visibility into what's happening behind the scenes, often leaving you staring at a blank screen, wondering if anything is happening at all.

In multiple tests, the system appeared to freeze completely; we only found out it was done when we opened a new tab and saw that the research had finished 10 minutes earlier.

Grok-3 takes a middle path on transparency, showing less of its work than Gemini but making up for it with practical structural innovations. Its standout feature is presenting key findings upfront before diving into details—similar to how a good executive summary works.

Research Depth: The Quality Dimension

When comparing AI research tools, research depth is probably the metric that separates sophisticated systems from glorified search engines. Our testing revealed some crucial differences in how these platforms approach comprehensive knowledge synthesis.

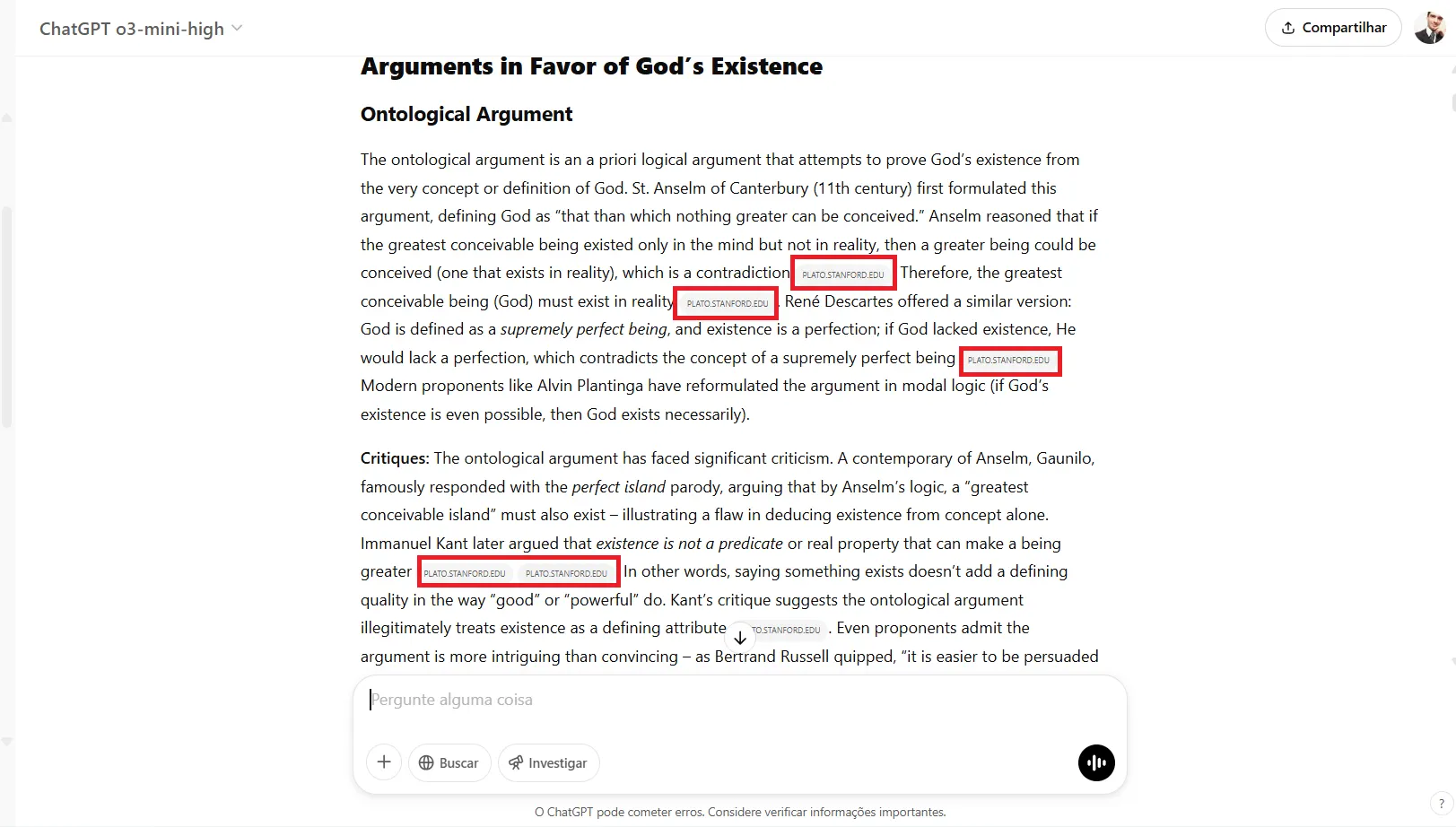

ChatGPT delivers exhaustive analyses that could pass for graduate-level research in terms of information, though not methodology. When exploring philosophical questions about God's existence, it generated a sprawling 17,000-word analysis covering distinct philosophical positions with historical context and nuanced counterarguments.

This comprehensiveness comes at a cost—information overload often buries key insights beneath mountains of context, creating a sort of labyrinth that users must navigate to extract actionable conclusions.

Gemini takes a more balanced approach: far more structured, yet still comprehensive enough, with a report of over 6,500 words.

It typically covers most of ChatGPT's material but organizes information with superior architectural precision, including formal citation systems with numbered references.

This disciplined knowledge hierarchy—clearly separating core concepts from supporting evidence—makes complex information significantly more digestible without sacrificing essential depth.

Grok-3 prioritizes speed over depth, employing what resembles an executive summary approach. The report was a bit over 1,500 words.

It reliably covers essential aspects of complex topics but avoids deep dives into subtleties. This efficiency-first methodology creates immediate utility at the expense of comprehensive understanding—perfect for quick orientation but potentially insufficient for academic applications.

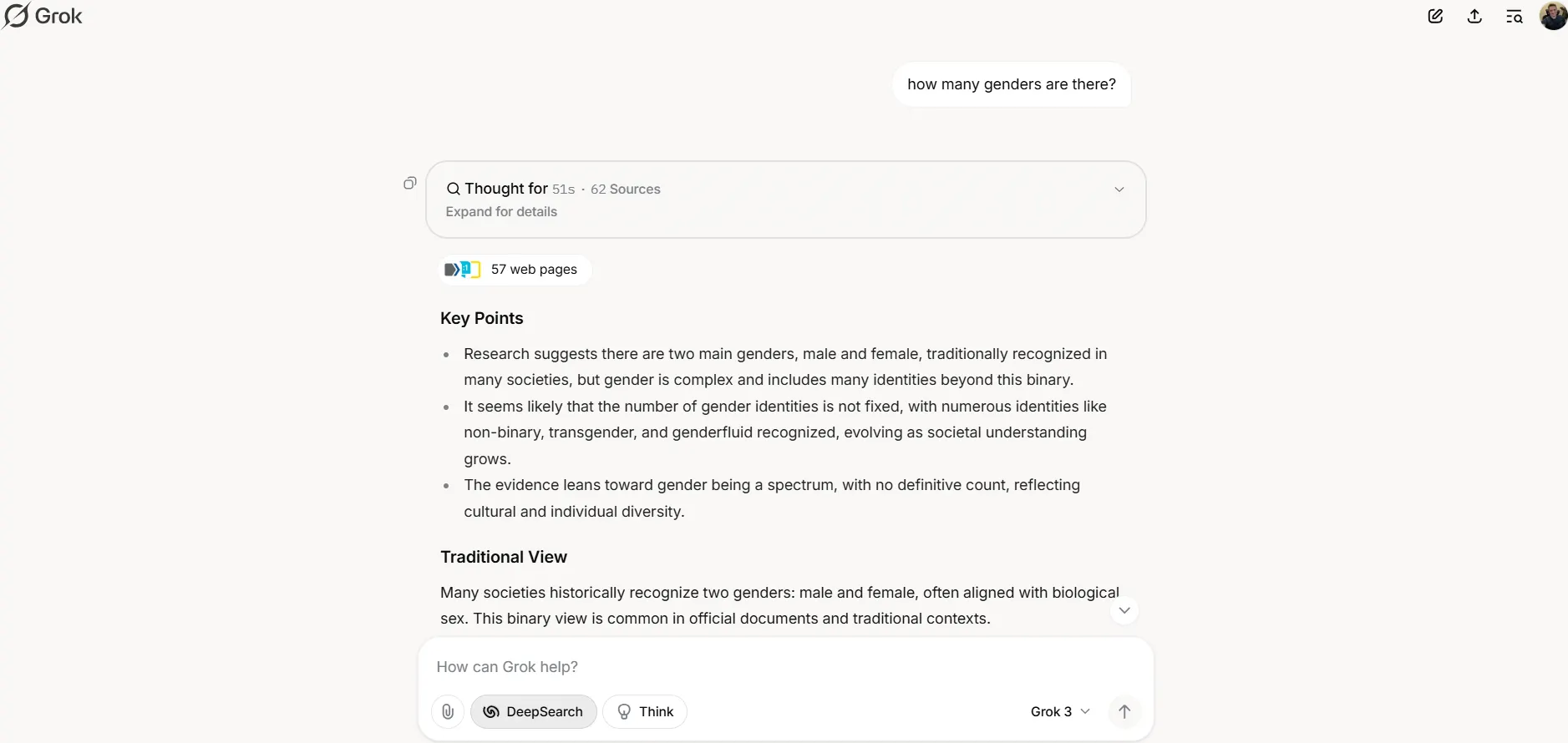

Interestingly enough, the query these models spent the most time investigating was the seemingly simple question “how many genders are there?”

ChatGPT took around 20 minutes, Gemini nearly half an hour, and Grok nearly eight minutes to write a simple answer, a thoughtfulness that is ironic given xAI’s owner.

None of them gave us an actual number, by the way.

For users, the optimal choice depends entirely on specific knowledge needs: academic researchers might prefer ChatGPT's depth despite its verbosity, and professionals balancing thoroughness with time constraints might find Gemini's approach ideal.

In contrast, those needing quick insights without comprehensive context might gravitate toward Grok-3's efficiency-first model.

Citation Reality Check

All three systems prominently display how many sources they've consulted, but our investigation uncovered a strange behavior that undermines these metrics.

When examining citation practices, we discovered all three systems frequently count different pieces of information from the same source as separate citations.

This creates a misleading impression about the breadth of research conducted.

In practical terms, when an AI claims to have consulted "20 sources," it may have pulled information from as few as 5 distinct documents, counting 4 paragraphs from each one as separate sources.

This citation inflation makes it difficult to accurately assess how comprehensive the research actually is—a serious concern for academic or professional applications where source diversity matters.
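The arithmetic behind citation inflation is simple: 5 documents × 4 paragraphs = 20 "sources." As a minimal sketch, assuming you can copy a report's citations out as a list of URLs and that citations to different paragraphs of one page differ only by a #fragment anchor (neither is confirmed for any of these agents), you could collapse duplicates like this. The `count_sources` helper and the example.com URLs are hypothetical.

```python
from urllib.parse import urldefrag


def count_sources(citations: list[str]) -> tuple[int, int]:
    """Return (citation entries, distinct documents) for a list of cited URLs.

    Assumption: two citations refer to the same document if their URLs match
    once any #fragment (such as a paragraph anchor) is stripped.
    """
    distinct = {urldefrag(url).url for url in citations}
    return len(citations), len(distinct)


# Hypothetical citation list: 5 documents, each cited for 4 different paragraphs.
citations = [
    f"https://example.com/doc{d}#para{p}"
    for d in range(1, 6)
    for p in range(1, 5)
]

entries, documents = count_sources(citations)
print(f"{entries} citations, {documents} distinct documents")
# 20 citations, 5 distinct documents
```

Real reports may cite the same document under different URLs (mirrors, tracking parameters), so a count like this is a lower bound on inflation, not a precise audit.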

Grok also has a way of cheating. Its information is generally good and accurate, but many of the links to its sources lead to 404 errors and nonexistent pages.

The Verdict: Different Tools for Different Jobs

These AI research assistants seem to have been optimized for distinctly different use cases. So, as cliché as it sounds, each one will be better for a specific type of user:

- Gemini (8.5/10): Offers the most balanced research experience with exceptional transparency. It's the top choice for serious research where understanding the source and methodology matters as much as the conclusions themselves. Think professional reports, business strategies, history research, or any scenario where you need to verify and potentially defend your sources.

- ChatGPT (8/10): Delivers the most comprehensive research depth but at significant costs to speed, transparency, and reliability. It's best suited for non-urgent, exploratory research where thoroughness trumps efficiency and where occasional system failures won't derail critical workflows. It is ideal for academia, grad-level researchers, philosophers, and scientists.

- Grok-3 (7/10): This agent is the speed champion, with excellent information presentation. It's perfect for time-sensitive scenarios where you need quick, clear insights without necessarily needing to trace every step of the research journey. Journalists on deadline, professionals preparing for imminent meetings, quick travel plans, quick fact-checking of complex topics, or anyone who values their time will appreciate Grok-3's efficiency, as long as they know not to rely on this agent for deep dives into the topics being researched.

For now, Gemini offers the most substantial overall package for general research needs, but the "right" choice ultimately depends on whether you prioritize speed, transparency, or thoroughness—and at present, no single platform delivers the perfect trifecta of all three virtues.

Edited by Sebastian Sinclair and Josh Quittner

decrypt.co
