It took almost nine years for Bitcoin to hit $10,000 for the first time, and a little over 12 years to crack $50,000. McKinsey argues patience will be needed with tokenization, too.

There’s been no shortage of excitement about how the tokenization of real-world assets can change the world as we know it.

One report by 21.co, published last October, enthusiastically predicted that this market will be worth $10 trillion by 2030 — and a still-impressive $3.5 trillion in the worst-case scenario.

This has been coupled with dizzying amounts of experimentation and fundraising, with tokenization platforms raking in millions of dollars so they can expand.

But according to McKinsey, a sobering reality check is needed.

While the consulting firm’s analysts agree that this technology will revolutionize financial institutions, transform the investing experience and make trading a lot cheaper, they believe there’s a real danger of the tokenization sector trying to run before it can walk.

“There have been many false starts and challenges thus far.”

McKinsey

Bursting the bubble of keen analysts who believe that every single stock and fund on the planet will be tokenized in the blink of an eye, McKinsey questioned how much traction this fledgling sector can achieve between now and the end of the decade, which is just six years away.

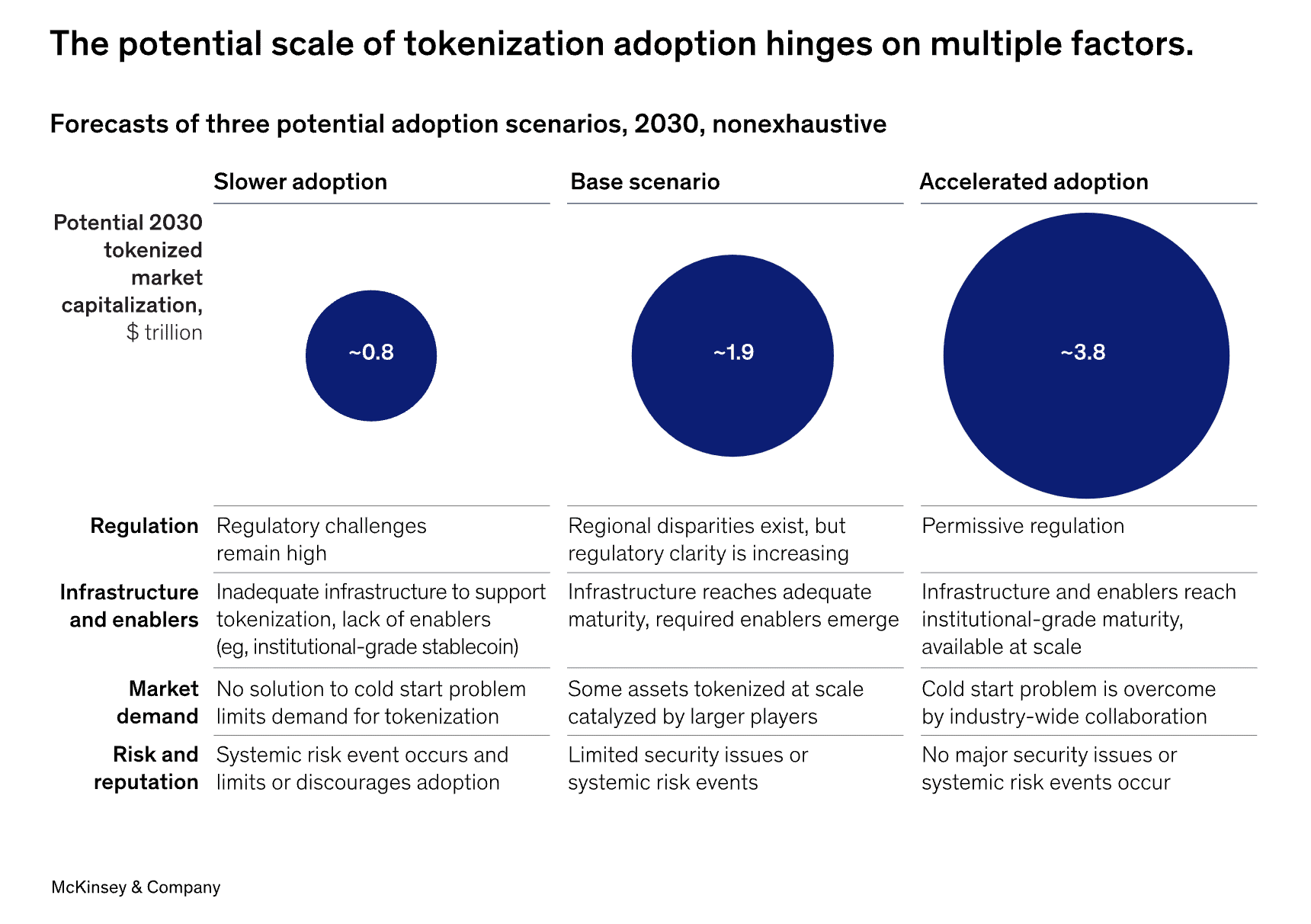

“Based on our analysis, we expect that total tokenized market capitalization could reach around $2 trillion by 2030 … in a bullish scenario, this value could double to around $4 trillion, but we are less optimistic than previously published estimates.”

McKinsey

In the authors’ most pessimistic scenario, the tokenization sector could be worth as little as $1 trillion, which is less than Bitcoin’s current market cap.

This isn’t McKinsey trying to dismiss this technology as a fad; it’s a frank acknowledgement that adoption takes a while. It took almost nine years for Bitcoin to hit $10,000 for the first time, and a little over 12 years to crack $50,000.

In setting out the obstacles that tokenization faces in gaining wider momentum, and in achieving an all-important “network effect,” McKinsey says early adopters are likely to run into:

- Limited liquidity, with disappointing transaction volumes failing to deliver a robust market

- Parallel issuance on old-fashioned platforms leading to greater expense

- Long-established processes being disrupted

Because of all this, tokenization faces an additional challenge to reach its full potential: proving that it’s better than the current way of doing things. Using bonds as an example, the report says:

“Overcoming the cold start problem would require constructing a use case in which the digital representation of collateral delivers material benefits — including much greater mobility, faster settlement, and more liquidity.”

McKinsey

Three other potential hurdles are also worthy of discussion.

As McKinsey notes, the financial services sector relies on systems that move trillions of dollars in value, some of which are many decades old. Upgrading them will take time, must be done with great care, and will require some degree of uniformity.

Regulators will also need to have their say — and as we’ve seen in the digital assets space, they can be fairly slow to take action with nascent technologies.

There also needs to be a serious discussion about whether blockchains are up to the job. Scalability has long been a concern for the biggest networks, but Layer 2s have started to meaningfully pick up the slack. Blockchains are also prone to fragmentation, creating silos that are unable to communicate with one another. While bridges have been touted as a potential solution here, they’re not without security problems, and have suffered some audacious multimillion-dollar hacks over the years.

McKinsey’s overall argument is this: tokenization is inevitable, and the benefits will be significant. It imagines a world where businesses can make payments 24 hours a day, with fairer terms for investors and much-needed modernization in financial products. However, proponents are losing sight of the fact that Rome wasn’t built in a day.

“Consumer technologies (such as the internet, smartphones, and social media) and financial innovations (such as credit cards and ETFs) typically exhibit their fastest growth (over 100 percent annually) in the first five years after inception.”

McKinsey

And while the rewards for early movers can be great, especially if they create a tokenization platform that becomes a market leader, there’s a risk they could end up being usurped, and rendered obsolete, by a later start-up with slicker technology. That would mean countless millions of dollars in investment going down the drain.

In the meantime, set a reminder for 2030. When it comes to the total value of the tokenization market, 21.co and McKinsey can’t both be right. However, they can both be very wrong.