In a burgeoning technology scene dominated by giants like OpenAI and Google, NExT-GPT—an open source multimodal AI large language model (LLM)—might have what it takes to compete in the big leagues.

ChatGPT took the world by storm with its ability to understand natural language queries and generate human-like responses. But as AI continues to advance at lightning speed, people have demanded more power. The era of pure text is already over, and multimodal LLMs are arriving.

Developed through a collaboration between the National University of Singapore (NUS) and Tsinghua University, NExT-GPT can process and generate combinations of text, images, audio and video. This allows for more natural interactions than text-only models like the basic ChatGPT tool.

The team that created it pitches NExT-GPT as an "any-to-any" system, meaning it can accept inputs in any modality and deliver responses in the appropriate form.

The potential for rapid advancement is enormous. As an open-source model, NExT-GPT can be modified by users to suit their specific needs. This could lead to dramatic improvements beyond the original, much like what happened with Stable Diffusion versus its initial release. Democratizing access lets creators shape the technology for maximum impact.

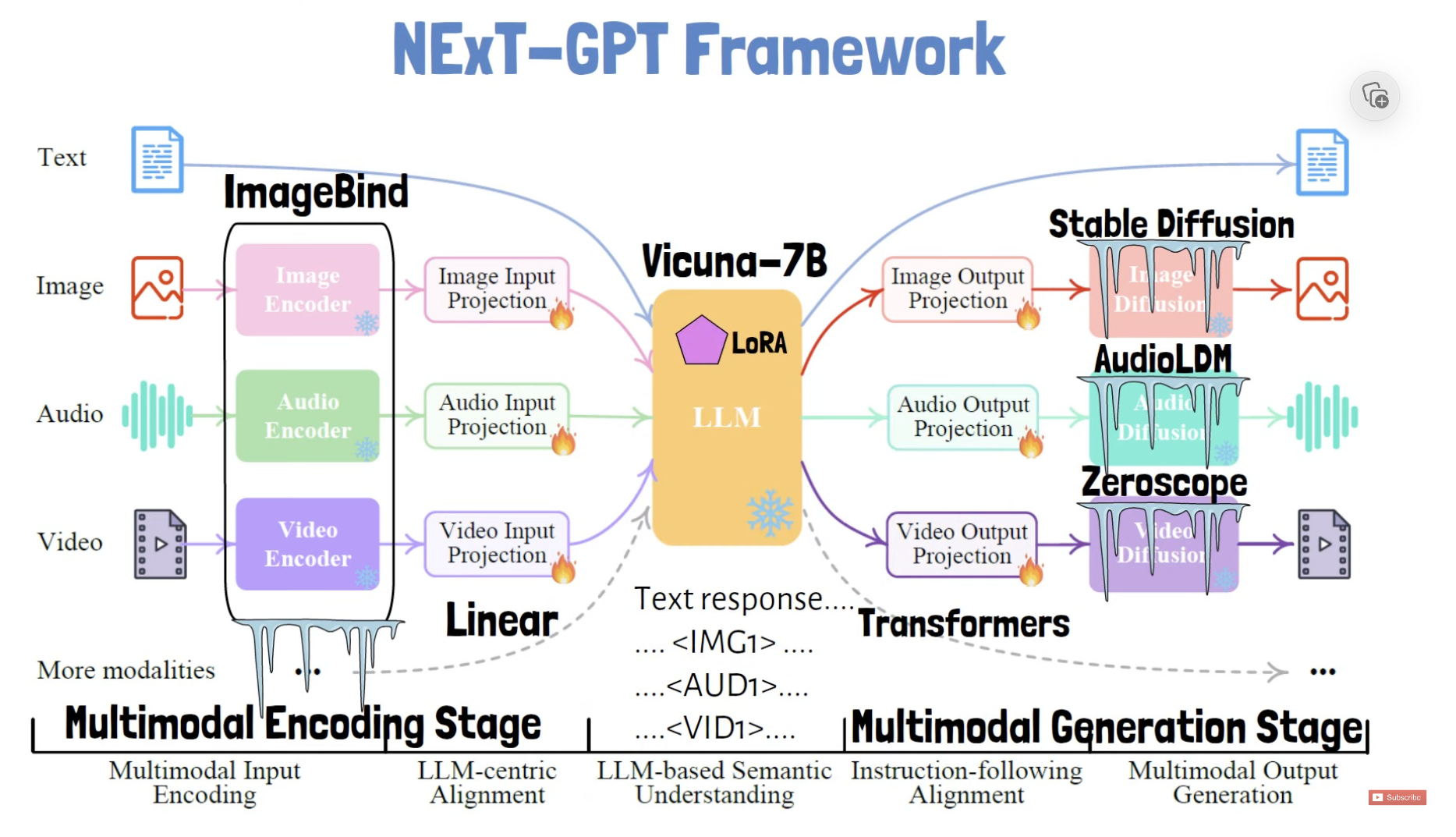

So how does NExT-GPT work? As explained in the model’s research paper, the system has separate modules to encode inputs like images and audio into text-like representations that the core language model can process.

The researchers introduced a technique called "modality-switching instruction tuning" to improve cross-modal reasoning abilities—its ability to process different types of inputs as one coherent structure. This tuning teaches the model to seamlessly switch between modalities during conversations.

To handle inputs, NExT-GPT uses unique tokens, like for images, for audio, and for video. Each input type gets converted into embeddings that the language model understands. The language model can then output response text, as well as special signal tokens to trigger generation in other modalities.

A token in the response tells the video decoder to produce a corresponding video output, for example. The system's use of tailored tokens for each input and output modality allows flexible any-to-any conversion.

The language model then outputs special tokens to signal when non-text outputs like images should be generated. Different decoders then create the outputs for each modality: Stable Diffusion as the Image Decoder, AudioLDM as the Audio decoder, and Zeroscope as the video decoder. It also uses Vicuna as the base LLM and ImageBind to encode the inputs.

NExT-GPT is essentially a model that combines the power of different AIs to become a kind of all-in-one super AI.

NExT-GPT achieves this flexible "any-to-any" conversion while only training 1% of the total parameters. The rest of the parameters are frozen, pretrained modules—earning praise from the researchers as a very efficient design.

A demo site has been set up to allow people to test NExT-GPT, but its availability is intermittent.

With tech giants like Google and OpenAI launching their own multimodal AI products, NExT-GPT represents an open source alternative for creators to build on. Multimodality is key to natural interactions. And by open sourcing NExT-GPT, researchers are providing a springboard for the community to take AI to the next level.

decrypt.co

decrypt.co